Cognitive Cartographies

Machine Learning & the Participatory Plane

Interface

This project positions itself at the nexus of computational design, geospatial analysis, and community-centric visualization tools. By integrating machine learning pipelines and accessible web interfaces, the ongoing work seeks to democratize satellite imagery analysis and 3D terrain modeling, bridging complex data science tools with public participation. The platform under development transforms satellite imagery into processed visuals, enabling interactive exploration of color patterns, edge detection, depth mapping, and 3D contour modeling. This combination is intended to culminate in community-augmented landscape transformations. Early results highlight the potential of these methods for participatory urban planning, community-driven projects, and broader environmental assessments, offering a new perspective on public engagement through technology.

“The interface turns passive information into active participation, like a ‘digital post-it’ exercise.”

From GIS Expertise to Public Empowerment

Traditional geospatial analysis has long required advanced knowledge of complex software such as ArcGIS, which places the data beyond the reach of the broader public. This research aims to reimagine that access by developing a tool that uses computer vision and deep learning to simplify the interpretation and processing of satellite images. Through its focus on participatory design, the platform is intended to shift the user’s role from passive observer to active participant, allowing users to annotate, modify, and visualize changes in their local landscapes. The aim is an inclusive dialogue in which users can contribute meaningfully to urban and environmental planning. The hypothesis underpinning this project is that, by democratizing access to satellite image processing, communities can engage in informed, collective action, potentially driving more resilient and contextually relevant urban interventions. This shift challenges traditional power dynamics in urban design, positioning local communities as co-creators of, and advocates for, their environmental surroundings.

Bridging Technical Complexity and Accessibility

The research builds on well-established image processing and deep learning techniques, integrating them into a comprehensive tool tailored for public use. Foundational methods such as the Canny Edge Detector (Canny, OpenCV) provide the basis for automated edge detection, producing clearly outlined images in which significant features are easier to discern. Vision Transformers (Ranftl et al.) contribute advances in dense image prediction and inform the depth estimation methods currently adopted in this ongoing work. The research also incorporates K-means clustering (Scikit-Learn) for color palette analysis, which simplifies the visualization of landscape features, and integrates sketch-to-image capabilities from Stability AI models to support real-time user engagement and modification. This approach reflects a shift toward geospatial tools that retain analytical power while offering user-friendly interactions, making sophisticated data analysis more accessible. The fusion of these technologies balances complex computation with intuitive design, inviting more diverse participation in geospatial analysis and planning.

Simplifying Geospatial Interaction through Automation

The platform’s processing pipeline currently focuses on transforming satellite images into simplified visual representations. K-means clustering identifies and displays dominant color blocks, helping users recognize and understand land cover distributions. Edge detection algorithms trace significant features, emphasizing natural and built elements for more precise visualization. Depth estimation models, such as those based on Intel DPT, estimate relative depth from the image so that these visuals can be rendered in 3D, giving users an immersive sense of topographical variation. One of the most promising aspects of the platform is its interactive sketching feature: users draw potential modifications directly on images rendered by the platform, and Stable Diffusion turns these sketches into realistic visual outputs. The platform’s modular design includes adjustable sliders for color count, depth scaling, and contour distance, so that users at every experience level, from novices to experts, can tailor the analysis to their needs. This adaptability supports broad participation, encouraging users to experiment and contribute insights to the planning process. Ongoing development will refine these features to further improve the user experience and expand participation.
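The color-blocking step can be sketched with scikit-learn's `KMeans`: each pixel is treated as a point in RGB space, the pixels are grouped into `n_colors` clusters, and every pixel is repainted with its cluster's mean color. The helper name `color_block`, the pixel-subsampling cap, and the per-color share output are assumptions for illustration; `n_colors` stands in for the color-count slider.

```python
import numpy as np
from sklearn.cluster import KMeans


def color_block(image: np.ndarray, n_colors: int = 6, max_samples: int = 20_000,
                seed: int = 0):
    """Hypothetical helper: quantize an RGB image into n_colors flat color blocks.

    Returns the blocked image, the palette (n_colors x 3, uint8), and the share
    of pixels assigned to each palette color.
    """
    h, w, c = image.shape
    pixels = image.reshape(-1, c).astype(np.float64)
    rng = np.random.default_rng(seed)
    # Fit on a random subset of pixels so large satellite tiles stay fast (assumed cap).
    n_fit = min(len(pixels), max_samples)
    sample = pixels[rng.choice(len(pixels), size=n_fit, replace=False)]
    km = KMeans(n_clusters=n_colors, n_init=10, random_state=seed).fit(sample)
    # Assign every pixel, not just the sample, to its nearest cluster center.
    labels = km.predict(pixels)
    palette = np.clip(np.rint(km.cluster_centers_), 0, 255).astype(np.uint8)
    blocked = palette[labels].reshape(h, w, c)
    # Fraction of the image covered by each color: a rough proxy for land-cover proportions.
    shares = np.bincount(labels, minlength=n_colors) / labels.size
    return blocked, palette, shares
```

Fitting on a subsample and then predicting labels for all pixels is a common trade-off: the palette is nearly the same, but clustering cost no longer grows with the full image size.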

Visualization as Public Engagement

The methods employed support a dual-layered engagement model: users can interpret current landscapes and collaborate to project potential modifications. Early platform demonstrations produce interactive visuals, such as color-blocked images and 3D-rendered heightfields, which can be explored further through serial contour plots. This approach seeks to align computational sophistication with public utility, opening data interpretation tools that were previously restricted to professionals to a wider public. The user-friendly interface is designed to transform passive information sharing into active participation, akin to a ‘digital post-it and marker’ exercise. Participants can sketch and test ideas in real time, making community workshops more interactive and dynamic. By enabling iterative visual feedback, the tool aims to foster richer conversations and a shared sense of agency, allowing communities to envision their input and refine it into tangible visual representations that inform urban and environmental planning.
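The heightfield and contour views can be approximated with Matplotlib. The sketch below assumes a 2D array of relative depth values, such as the output of a DPT-style model; models in the MiDaS/DPT family typically predict relative inverse depth (larger means nearer the camera), which for a top-down image is treated here as a rough height proxy. The helper name, the normalization to [0, 1], and the mapping of the depth-scaling and contour-distance sliders onto `depth_scale` and `contour_distance` are all illustrative assumptions.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; drop this line in an interactive session
import matplotlib.pyplot as plt
import numpy as np


def heightfield_views(depth: np.ndarray, depth_scale: float = 1.0,
                      contour_distance: float = 0.1):
    """Hypothetical helper: show a relative depth map as a 3D surface and a contour plot.

    depth_scale stretches the vertical axis; contour_distance is the spacing
    between contour levels in the same (scaled) units.
    """
    if contour_distance <= 0:
        raise ValueError("contour_distance must be positive")
    depth = np.asarray(depth, dtype=float)
    span = depth.max() - depth.min()
    # Normalize to [0, 1] so slider values behave the same for any model's output range.
    norm = (depth - depth.min()) / span if span > 0 else np.zeros_like(depth)
    z = norm * depth_scale
    levels = np.arange(0.0, z.max() + contour_distance, contour_distance)
    yy, xx = np.mgrid[0:z.shape[0], 0:z.shape[1]]

    fig = plt.figure(figsize=(10, 4))
    ax3d = fig.add_subplot(1, 2, 1, projection="3d")
    ax3d.plot_surface(xx, yy, z, cmap="terrain", linewidth=0)
    ax3d.set_title("Heightfield")
    ax2d = fig.add_subplot(1, 2, 2)
    ax2d.contour(xx, yy, z, levels=levels, cmap="terrain")
    ax2d.set_aspect("equal")
    ax2d.invert_yaxis()  # match image row order (row 0 at the top)
    ax2d.set_title(f"Contours every {contour_distance:g} units")
    return fig, z, levels
```

In an interactive interface, the two parameters would be re-read from the sliders and the figure redrawn; returning `levels` makes the chosen contour intervals available for labeling or export.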

Toward Participatory Landscape Evolution

The platform described in this research represents an evolving model that aims to turn satellite image processing into an interactive, participatory tool. This shift could reshape urban and environmental planning into a more collaborative process. Integrating sketch-based AI outputs is intended to let communities engage in feedback loops that return immediate, informed visual responses, supporting a more iterative design approach. The goal is to evolve from simple visualization tools into adaptive, interactive platforms where public participation extends beyond basic input to more detailed and complex planning contributions. Future development phases include incorporating real-time 3D models that reflect user-generated designs and bridging digital explorations with physical manifestations. This cycle, linking digital tools, interactive design, and material outputs, positions the platform as an enabler of more adaptive, resilient, and inclusive urban and environmental planning.

Canny Edge Detector, Canny, OpenCV script

Vision Transformers for Dense Prediction, Ranftl et al.

3D Terrain Modelling, Matplotlib Library

Palette Analyzer with K-means, Scikit-Learn Library

Sketch-to-Image Tool, Stability AI

Neural Style Transfer, Gatys et al.

TorchGeo: Deep Learning with Geospatial Data, Stewart et al.

Fields of The World, Kerner et al.

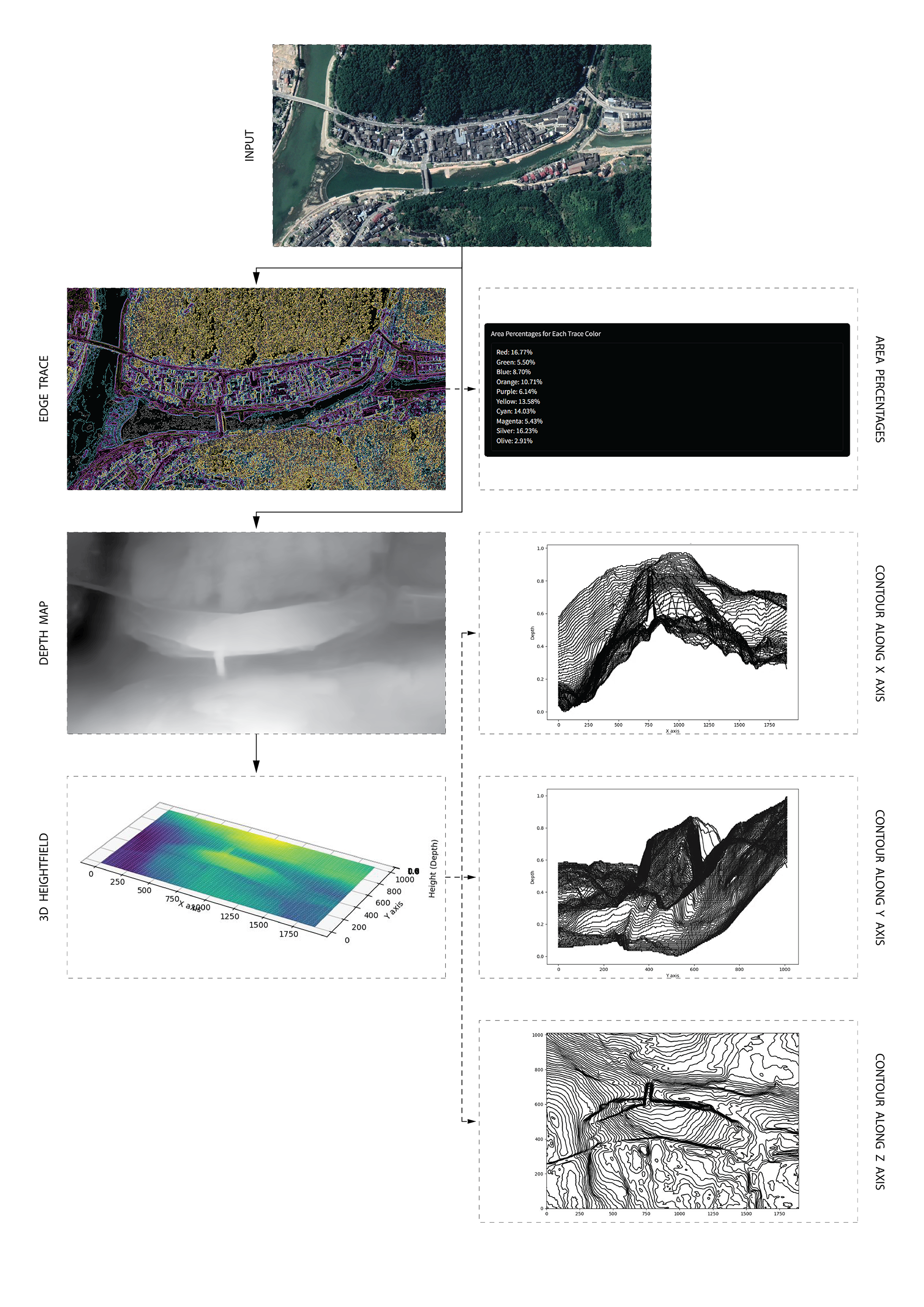

Geospatial Interactions: Transforming Satellite Data for Public Use

A step-by-step workflow showing how a satellite image is processed through multiple stages.

Panels illustrate outputs from various satellite images processed using the platform. Each panel demonstrates how users can customize outputs by adjusting the number of color blocks, depth scaling, and contour distance.

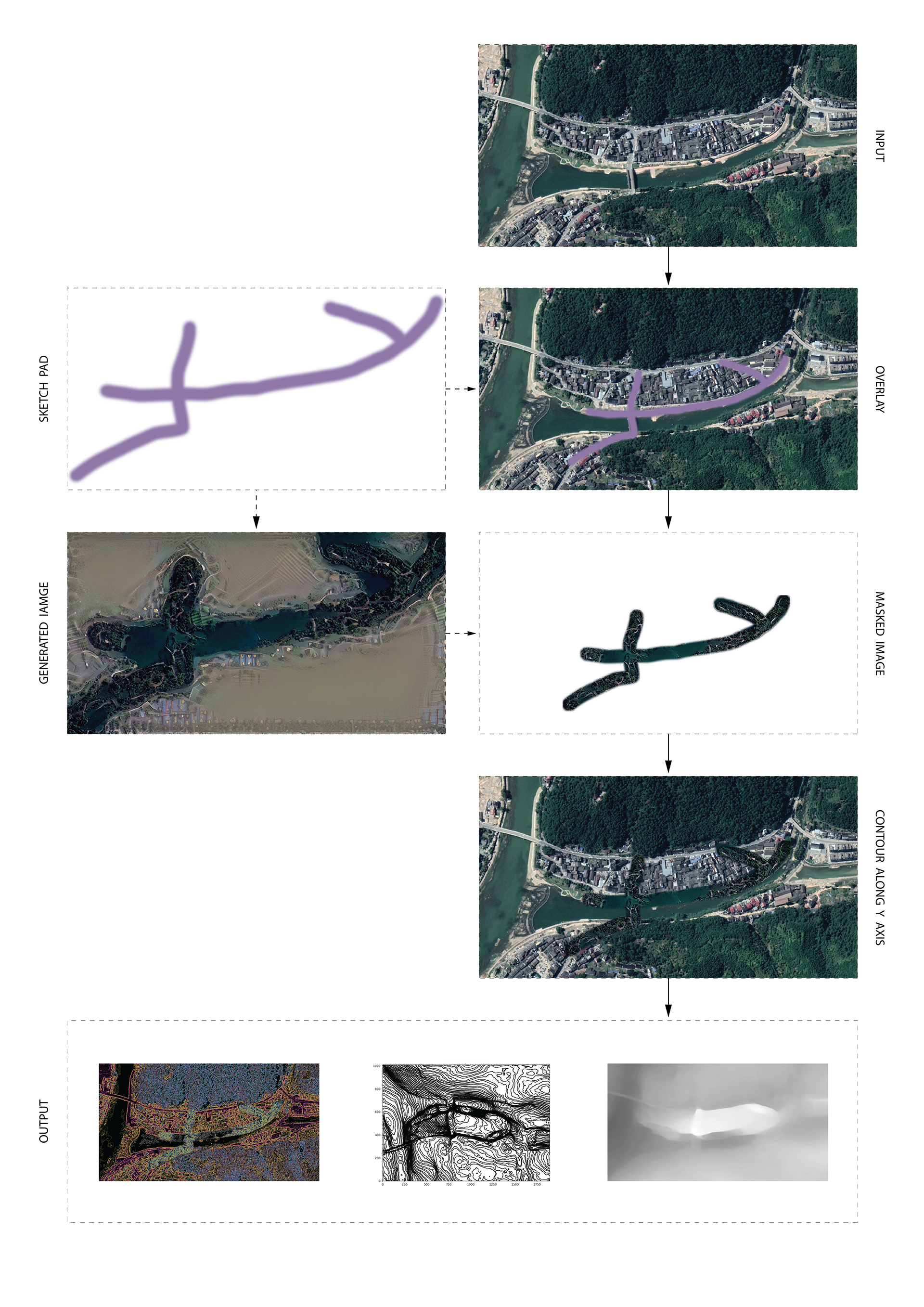

Speculative Transformations: Transforming Sketches into Site Visualizations

Workflow designed to transform user sketches into detailed site images using tools like Stable Diffusion. Still in development, this process aims to quickly analyze and visualize speculative changes.

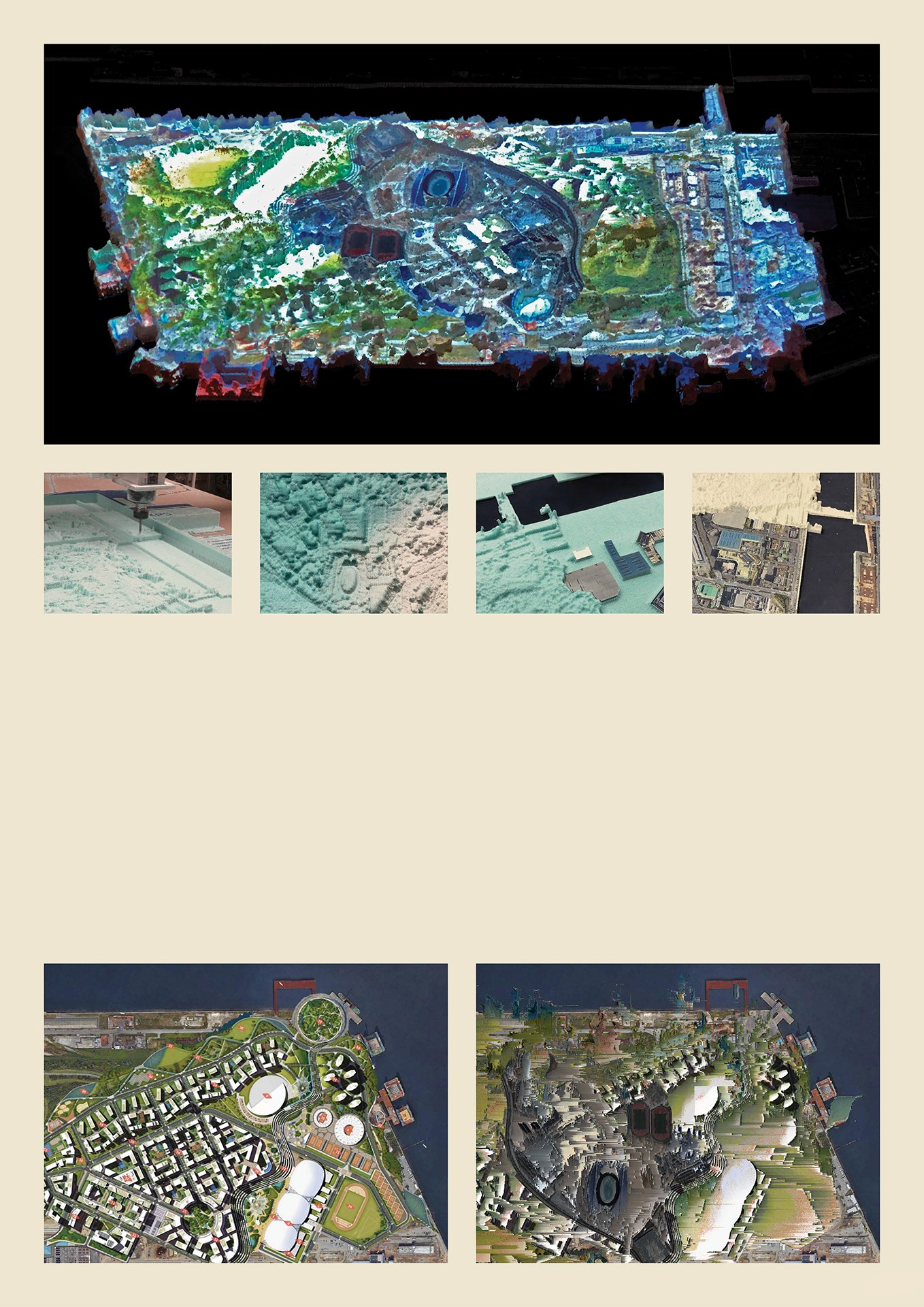

Above: CNC-milled physical model paired with digital projections to visualize speculative changes.

Below: Comparison of the existing master plan with a digitally processed speculative version.